Data Mining and Predictive Analytics

Posted on Wed 11 January 2017 in Data-Science

Data Mining and Predictive Analytics

-

Data Mining : The process of discovering useful patterns and trends in large data-sets.

-

Predictive Analytics : The process of extracting information from large data-sets in order to make predictions and estimates about future outcomes.

What is Data Mining?

Example :

Problem : Dell was interested in improving the productivity of its sales workforce.

Analyze database of potential customers in order to identify most likely respondents

Machine Learning :

Statistical learning refers to a vast set of tools for understanding data.

These tools can be classified as

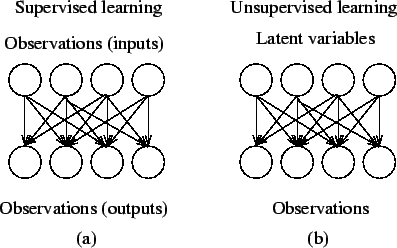

- Supervised : Supervised statistical learning involves building a statistical model for predicting, or estimating, an output based on one or more inputs.

- Unsupervised : There are inputs but no supervising output; nevertheless, we can learn relationships and structure from such data.

Supervised Learning :

When computers are applied to solve a practical problem it is usually the case that the method of deriving the required output from a set of inputs can be described explicitly.

The task of the system designer and eventually the programmer implementing the specifications will be to translate that method into a sequence of instructions which the computer will follow to achieve the desired effect.

Thus, when there is no set method (or no known method) for computing the desired output from a set of inputs, or where that computation may be very expensive.

Example : * Modelling a complex chemical reaction, where the precise interactions of the different reactants are not known, or

-

Classification of protein types based on the DNA sequence from which they are generated, or

-

Classification of credit applications into those who will default and those who will repay.

These tasks cannot be solved by a traditional programming approach since the system designer cannot precisely specify the method by which the correct output can be computed from the input data.

An Alternate Strategy :

The computer can attempt to learn the input/output functionality from examples, in the same way that children learn which are sports cars simply by being told which of a large number of cars are sporty rather than by being given a precise specification of sportiness.

The approach of using examples to synthesize programs is known as the learning methodology, and in the particular case, when the examples are input/output pairs, it is called supervised learning. The examples of input/output functionality are referred to as the training data.

The input/output pairings typically reflect a functional relationship mapping inputs to outputs, though this is not always the case.

-

When the outputs are corrupted by noise.

-

When an underlying function from input to output exists, it is referred to as the target function.

-

The estimate of the target function which is learnt or output by the learning algorithm is known as the solution of the learning problem.

-

In the case of classification, this function is sometimes referred to as the decision function.

-

The solution is chosen from a set of candidate functions which map from the input space to the output domain.

-

Usually, we will choose a particular set or class of candidate functions known as hypotheses before we begin trying to learn the correct function.

-

For example, so-called decision trees are hypotheses created by constructing a binary tree with simple decision functions at the internal nodes and output values at the leaves.

- Hence, we can view the choice of set hypotheses (or hypotheses space) as one of the key ingredients of the learning strategy. The algorithm which takes the training data as input and selects a hypothesis from the hypothesis space is the second important ingredient. It is referred to as learning algorithm.

Classification:

Types of Classification : 1. Binary classification problem : Example : In the case of learning to distinguish sports cars the output is a simple Yes/No tag which we can think of as a binary output value. 2. Multi-Class Classification : Example : For the problem of recognising protein types, the output value will be one of a finite number of categories, While, the output values when modelling a chemical reaction might be the concentrations of the reactants given as real values.

Unsupervised Learning :

A case where there are no output values and the learning task is to gain some understanding of the process that generated the data. This type of learning includes density estimation, learning the support of a distribution, clustering and so on.There are even more complex models. The simplest case is when the learner is allowed to query the environment about the output associated with a particular input. The study of how this affects the learner's ability to learn different tasks is known as query learning. A more complex model of interaction is considered in reinforcement learning, where the learner has a range of actions at their disposal which they can take to attempt to move towards states, where they can expect high rewards. The learning methodology can play a part in reinforcement learning, if we treat the optimal action as the output of a function of the current state of the learner. There are, however, significant complications since the quality of the output can only be assessed indirectly as the consequences of an action become clear.

Basic Difference b/w Supervised and Unsupervised :

Example 1 : Face recognition

- Supervised learning : Learn by examples as to what a face is in terms of structure, color, etc so that after several iterations, it learns to define a face

- Unsupervised learning : Since there is no desired output in this case that is provided therefore categorization is done so that the algorithm differentiates correctly between the face of a horse, cat or human (clustering of data)

Thus, In supervised learning, the output datasets are provided which are used to train the machine and get the desired outputs whereas in unsupervised learning no datasets are provided, instead the given data is clustered into different classes.

Example 2 :

-

Scenario 1: You are a kid, you see different types of animals, your father tells you that this particular animal is a dog...after him giving you tips few times, you see a new type of dog that you never saw before - you identify it as a dog and not as a cat or a monkey or a potato.

-

Scenario 2 : You go bag-packing to a new country, you did not know much about it - their food, culture, language etc. However from day 1, you start making sense there, learning to eat new cuisines, including what not to eat, find a way to that beach etc.

Example 3 :

Supervised Learning:

-

Problem : Given a basket of fruits, arrange same types of fruits in one place.

-

Previous Work : The shape of each and every fruit is known, so it is easy to arrange the same type of fruits at one place.

-

Suppose fruits are : apple,banana,cherry,grape.

-

With the previous work, the shape of each and every fruit so, it is easy to arrange the same type of fruits at one place.

-

Here, the previous work is called as train data in data mining.

-

There's a response variable which tells where the fruit needs to be placed, based on the features of the fruit.

-

This type of data is called the train data.

-

This type of learning is called supervised learning.

Unsupervised Learning:

-

Problem : Given a fruit basket. Arrange the same type of fruits at one place.

-

But, this time, nothing is given (i.e. no previous work). What will be the category of classification?

-

The category would be the physical character of the particular fruit.

-

For example : "Fruit Color"

-

Thus, no training data and no response variable.

-

i.e. Unsupervised Learning.

Applications :

The availability of reliable learning systems is of strategic importance, as there are many tasks that cannot be solved by classical programming techniques, since no mathematical model of the problem is available.

So for example it is not known how to write a computer program to perform hand-written character recognition, though there are plenty of examples available.

It is therefore natural to ask if a computer could be trained to recognize the letter ?A' from examples - after all this is the way humans learn to read. We will refer to this approach to problem solving as the learning methodology The same reasoning applies to the problem of finding genes in a DNA sequence, filtering email, detecting or recognizing objects in machine vision, and so on.

Dataset Examples :

Wage dataset

- In this application, we examine a number of factors that relate to wages for a group of males from the Atlantic region of the United States. In particular, we wish to understand the association between an employee's age and education, as well as the calendar year on his wage.

Analysis :

- Wage increases with age.

- Wage decreases after 60.

- There is a slow but steady increase of approximately \(\\)10,000$ in the average wage between 2003 to 2009.

- Higher the education, better the wage.

Thus, given an employee's age, we can use this curve to predict his wage. However, it is also clear, that there is a significant amount of variability associated with this average value. Thus, age alone is unlikely to provide an accurate prediction of a particular man's age.

We also have information regarding each employee's education level and the year in which the wage was earned.

Wages increase by approximately \(10,000\), in a roughly linear (or straight-line) fashion, between 2003 - 09. Though this rise is very slightly relative to the variability in the data. Wages are also typically greater for individuals with higher education levels.

SVM :

Support Vector Classification :

The aim of Support Vector classification is to devise a computationally efficient way of learning ?good? separating hyperplanes in a high dimensional feature space. Here, : By 'good' hyperplanes we consider the ones optimising the generalisation bounds, and By 'computationally efficient' we mean algorithms able to deal with sample sizes of the order of 100 000 instances.

Pre-requisites : * The generalisation theory gives clear guidance about how to control capacity and hence prevent overfitting by controlling the hyperplane margin measures, while

- Optimisation theory provides the mathematical techniques necessary to find hyperplanes optimising these measures.

Different generalisation bounds exist, motivating different algorithms: one can for example optimise the maximal margin, the margin distribution, the number of support vectors, etc.

Focus : * Reduce the problem to minimising the norm of the weight vector.